Energy Efficiency | July 11, 2019

5G? Edge Computing? Liquid Cooling? Questions from CAPRE Boston

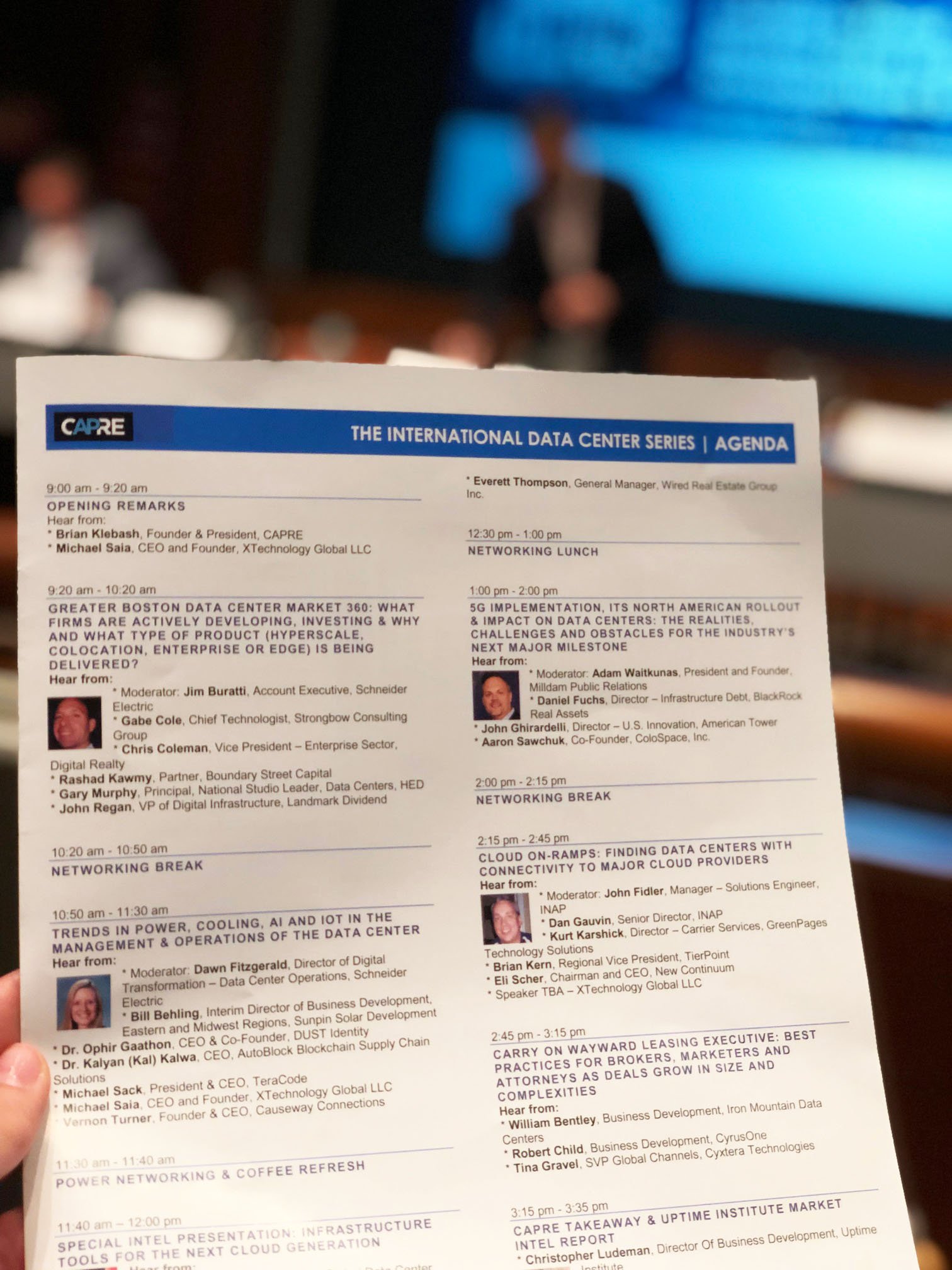

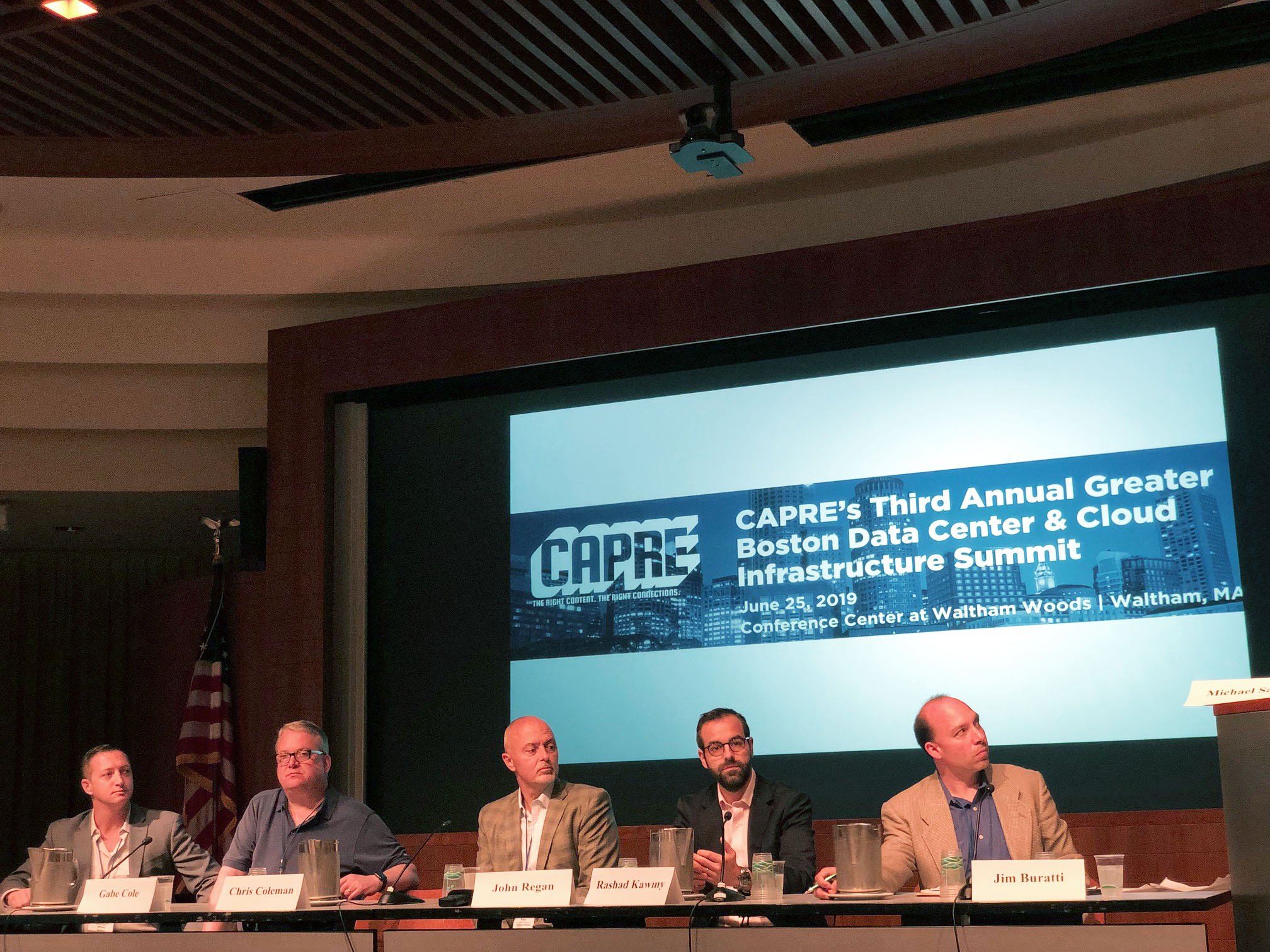

I probably heard the term “5G” mentioned over 50 times at CAPRE’s Boston data center event. A hot topic for the industry, the introduction of 5G networks will impact data centers across the board, from enterprise and colos to facilities and IT. An event developed for the local Boston data center community, CAPRE featured sessions targeting current solutions or issues affecting data centers both locally and nationally. Speakers addressed topics such as the impact of 5G, how liquid cooling may play a role in future operations and whether we’ve hit capacity for new data center builds, among others. Here are some of the insights I took away from the event.

5G and edge computing need to be tackled together

Multiple speakers and moderators discussed two main points: we’re going to need to see (a) how 5G networks are actually deployed and (b) how latency-sensitive applications work before we’ll fully understand the demand for edge computing. Currently, Tier 3 and 4 markets are functioning as our edge, handling needs for PoPs in locations closer to the point of data usage. We may eventually need edge PoPs every mile or so, but we will have to wait and see which applications really need that kind of density and in which markets.

Rural Massachusetts and the greater New England area could really benefit from this kind of edge PoP. However, from a provider standpoint, edge means being as close to the end user as possible while still deploying in a way that makes the equipment, computing power and storage worth the cost. One of the panels noted that the business model of the data center won’t necessarily change to accommodate edge computing, but we’ll likely see a significant increase in cloud infrastructure and continued growth for colos to handle the rise of 5G and the need for edge data centers.

The audience chuckled a bit over comments about how long we’ve been hearing about 5G, when it is coming and why we’re still just talking about this development without concrete applications. When asked about a realistic timeline for 5G, most panelists acknowledged that, in a way, we’re already there in some test areas and we’ll continue to see 4G deployments around the country, but 5G is still 2, 5, 10+ years away from full deployment. The general consensus was that the change will continue to be gradual, with the growth of 4G networks making it easier to “flip the switch” to 5G in the markets where it will matter. Many people may not actually need 5G, so deployment will be dynamic in that sense; we imagine providers will deploy what you need or what you pay for.

Liquid cooling might be the future, but it’s not here yet

One of the targeted discussions of the day focused on liquid cooling. Liquid cooling, a panelist proclaimed, is disrupting the relationship between power, cooling and computing. The panel considered how colos are struggling to keep up with power and cooling demands and could turn to new solutions, like liquid cooling, which can be achieved without mechanical cooling. Our take on the subject is that we see a huge amount of potential for sites to more efficiently use the power and cooling they have without turning to liquid cooling, but we’ll still be interested to see how impactful this technology may become over the next 5-10 years.

Ultimately, this panel and most of the audience seemed to agree, liquid cooling isn’t going to take off in a big way until one of “the big guys,” a hyperscale data center, publicly declares that it uses and benefits from liquid cooling. Until then, we recommend reevaluating your current mechanical, air-based cooling systems.

Are the “Big Guys” too big? Have we hit capacity for data centers?

Speaking of hyperscale, one of the questions many of us have probably considered at one point or another is whether the “big guys” – Google, Amazon, Microsoft – are getting too big. One of the panels at CAPRE brought this up, considering whether additional regulation is needed to constrain the growth of the current data center giants. Without a clear determination of what regulation would or could look like, the panel raised another question: would regulation actually close the door for future business, making it ultimately more difficult for smaller companies to enter the marketplace?

In parallel to acknowledging how much data center capacity is currently run by hyperscale players, another question raised at this event focused on overcapacity. During the dotcom bubble, tech companies experienced a rush of players to the marketplace that ultimately overdelivered Internet and tech services. The industry maxed out and many companies were forced to downsize or close. The discussion considered whether the data center industry is approaching a similar point. Most of the panelists were in agreement: we’re really not, and that’s mostly due to caution and realistic planning.

Capacity is a market-by-market question, and each market tends to be unique. New capacity being brought to market isn’t as large as it used to be, and when it is built in a new market, there is typically an anchor tenant. It’s not a shot in the dark. Ashburn, VA may be one of the locations getting close to capacity, but even there, colos or companies have an anchor tenant driving that kind of new build or expansion.

One area with oversupply that’s interesting to consider is legacy or second-generation colocation. These are the spaces that may become vacant and would need power and cooling upgrades to keep up with the new builds and new use cases today. Continuing to lease that kind of space may require investment. This is exactly what Fairbanks Energy Services sees as a “sweet spot,” where energy efficiency and mechanical upgrades can release stranded capacity and improve data center operations for sites that can’t keep up with today’s load demands.

Fundamentally, the panelists agreed, the growth in devices that generate data and need to store that data is not slowing down. We will continue to need more space. Long-term data archival is actually the fastest growing part of many colos’ cloud businesses. This need doesn’t seem to be going anywhere anytime soon.

Data matters to data centers

Besides managing other people’s data, data centers increasingly rely on their own generated data to influence operational decisions. Jeff Klaus, of Intel, emphasized how important it is to generate, analyze and understand valid information from data center operations. One point he focused on is that power is going to be an ongoing issue. As huge users of energy, data centers can really benefit from granular, real-time data about power usage. That means ensuring good data across dozens of data points, including both input and output readings, so the delta between them can be tracked.
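As a rough illustration of what tracking the delta between input and output readings could look like, here is a minimal Python sketch. It is not anything presented at the event: the `PowerReading` fields, the `flag_high_loss` helper, the 10% loss threshold and the sample values are all hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass


@dataclass
class PowerReading:
    """One paired sample from a hypothetical metering point."""
    timestamp: str    # ISO 8601 string, illustrative only
    input_kw: float   # power measured upstream of the equipment
    output_kw: float  # power measured downstream of the equipment


def power_delta(reading: PowerReading) -> float:
    """Return input minus output power in kW (the loss across the equipment)."""
    return reading.input_kw - reading.output_kw


def flag_high_loss(readings, max_loss_fraction=0.10):
    """Return readings whose loss exceeds a fraction of input power.

    The 10% default is an assumed threshold, not an industry figure.
    """
    flagged = []
    for r in readings:
        if r.input_kw <= 0:
            continue  # skip idle or invalid samples rather than divide by zero
        if power_delta(r) / r.input_kw > max_loss_fraction:
            flagged.append(r)
    return flagged


# Made-up sample values, for illustration only.
readings = [
    PowerReading("2019-07-11T09:00:00", 100.0, 94.0),
    PowerReading("2019-07-11T09:05:00", 100.0, 85.0),
]
high_loss = flag_high_loss(readings)
```

In practice the readings would come from metering or a BMS rather than a hard-coded list, and the threshold would depend on the equipment being monitored.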

At Mantis, we regularly educate customers and colleagues about the huge gains available to companies willing to implement or upgrade their building management systems (BMS) and the ways they track mechanical system performance, not only to reduce the amount of power consumed, but to deliver improved operations, redundancy and capacity to handle a larger load. This, Jeff concluded, is the crossroads of infrastructure management and facilities management. The one should be informing the other.
